Whether you were contacting customer service or asking about healthcare, education or some other area, you have most likely interacted with a chatbot at some point. While this may have made the service more efficient and let you get help outside regular business hours, there is a downside.

Typically, chatbots rely solely on text or voice to communicate. But we, as humans, use multiple senses when conversing, and we rarely rely on just one type of input.

This difference highlights why current chatbots can be problematic. Relying only on voice and text is restrictive and does not cater to people with different abilities or preferences. In today's environment, we should strive to ensure that technology benefits everyone, which is why inclusive design is essential. Inclusive design aims to develop products and services that work for a wide range of people, regardless of their abilities, languages, cultures and other differences.

In this blog, I want to show you how we can make conversational AI systems like chatbots work better for everyone.

One of the core principles of inclusive design is recognising that people have different abilities and backgrounds. To accommodate this while remaining commercially viable, the focus should be on making a product or service customisable to each person’s needs and preferences, rather than building separate, segregated solutions.

For a chatbot, that means enabling it to interact with people through different modes of communication, such as voice, text, images and video. This is called multimodality. Modalities refer to the ways our senses, physical skills and thinking styles come together to shape what we expect and what we can do. Multimodal design weaves these modalities together seamlessly when crafting experiences. It focuses on understanding what we can perceive through our senses, what information we need to make sense of, and what actions we expect to take, all of which depend on the situation.

Multimodal conversational AI lets users interact with systems through different forms of communication. The main modes are linguistic, visual, gestural, spatial and audio, but others exist. For example, some practitioners are exploring how hand movements, gaze (where your eyes are looking), and what you’ve previously said and done can be integrated into communication.

With multimodal conversational AI, you can choose the way you prefer to interact with a system. This makes the experience more natural and friendlier for everyone. The freedom to use voice, gestures or whatever makes you comfortable makes it feel more like communicating with a human being than retrieving information from a system. And when you can describe something while pointing to it in a picture, the system gets extra clues about what you mean instead of having to guess from the words alone.
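To make the idea of combining speech with pointing more concrete, here is a minimal sketch of resolving a word like “this” with the help of a pointing gesture. It assumes the system already has labelled bounding boxes for the objects in an image and receives the (x, y) position of a tap or pointing gesture; `Region`, `resolve_reference` and the box format are hypothetical names chosen for illustration, not part of any particular library.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Region:
    """A labelled object in an image as an axis-aligned box (hypothetical format)."""
    label: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1

    def area(self) -> float:
        return (self.x1 - self.x0) * (self.y1 - self.y0)


# Words whose meaning depends on what the user is pointing at.
DEICTIC_WORDS = {"this", "that", "these", "those", "here", "there"}


def resolve_reference(
    utterance: str,
    point: Optional[Tuple[float, float]],
    regions: Sequence[Region],
) -> Optional[str]:
    """Combine the words and an optional pointing position to pick an object label."""
    words = {w.strip(".,!?").lower() for w in utterance.split()}

    # 1. An object named explicitly wins (single-word labels only in this sketch).
    for region in regions:
        if region.label.lower() in words:
            return region.label

    # 2. A deictic word plus a pointing gesture selects the object under the point,
    #    preferring the smallest (most specific) box if several overlap.
    if point is not None and words & DEICTIC_WORDS:
        hits = [r for r in regions if r.contains(*point)]
        if hits:
            return min(hits, key=lambda r: r.area()).label

    # 3. Neither channel is conclusive, so the chatbot should ask rather than guess.
    return None


regions = [Region("kettle", 10, 10, 60, 80), Region("toaster", 70, 20, 130, 70)]
print(resolve_reference("Why won't this turn on?", (100, 40), regions))  # toaster
print(resolve_reference("Is the kettle broken?", None, regions))  # kettle
print(resolve_reference("Why won't this turn on?", None, regions))  # None
```

The design choice here is that explicit words take priority, and the gesture only fills in what words like “this” leave open. Returning `None` when neither channel settles the question lets the chatbot ask a follow-up instead of guessing.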

However, some challenges still need further work, especially around multimodal conversational reasoning. I think three areas are particularly important for building multimodal chatbots that are accessible to all: disambiguation, dialogue state tracking and cross-modal attention.

A persistent criticism of AI is that it doesn’t always understand context. If someone says ‘bank’, the AI has to work out from the context whether they mean a financial institution or the side of a river. To get this right, the AI must be taught to use context to pick the correct meaning. Disambiguation is the process of determining which sense of a term is meant in a particular context. It matters a lot for inclusive design because it helps the AI understand the different ways people use words and phrases. Natural language processing and machine learning techniques are being used to train AI to resolve these ambiguities.

However, these methods are far from perfect and can sometimes be biased. There are also inherent problems with phrases that are open to interpretation. Consider the expression “Time flies like an arrow, but fruit flies love bananas”. In the first clause, “flies” is a verb; in the second, it is part of the noun “fruit flies”. It is a classic example of how words with the same spelling or pronunciation but different meanings can cause problems.
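The idea of using surrounding words to choose a meaning can be illustrated with a simplified version of the Lesk algorithm, a classic dictionary-overlap approach to word sense disambiguation: pick the sense whose definition shares the most words with the sentence. This is a minimal sketch; the two glosses for “bank” and the stopword list are hand-written assumptions, and modern systems usually rely on learned contextual models rather than raw word overlap.

```python
import re
from typing import Dict, Set

# A small illustrative stopword list; real systems use a fuller one.
STOPWORDS = {"a", "an", "the", "of", "to", "in", "on", "at", "and", "or", "is",
             "i", "we", "my", "for", "by", "with", "that", "this", "it"}

# Hand-written sense glosses for "bank" (assumed for illustration; a real system
# might draw them from a lexical database such as WordNet).
BANK_SENSES: Dict[str, str] = {
    "financial": "an institution that accepts deposits of money, makes loans "
                 "and manages savings accounts",
    "river": "the sloping land beside a river or lake where water flows",
}


def content_words(text: str) -> Set[str]:
    """Lowercase the text, split it into words and drop stopwords."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(sentence: str, senses: Dict[str, str]) -> str:
    """Return the sense whose gloss shares the most content words with the sentence.

    Ties (including zero overlap) go to the first sense listed.
    """
    context = content_words(sentence)
    overlaps = {sense: len(context & content_words(gloss))
                for sense, gloss in senses.items()}
    return max(overlaps, key=overlaps.get)


print(simplified_lesk("I need to deposit money at the bank", BANK_SENSES))  # financial
print(simplified_lesk("We sat on the bank and watched the river flow", BANK_SENSES))  # river
```

The example also shows why such methods are brittle: “deposit” does not match “deposits” without stemming, “flow” does not match “flows”, and when nothing overlaps at all, the function simply falls back to the first sense listed.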

What if AI could chat like your best friend, knowing your likes, dislikes and what you want? That's the aim of dialogue state tracking, which works like a memory that follows you through the conversation. Techniques such as rule-based models and memory networks help the AI remember what you've told it so far and respond in a way that works for you.

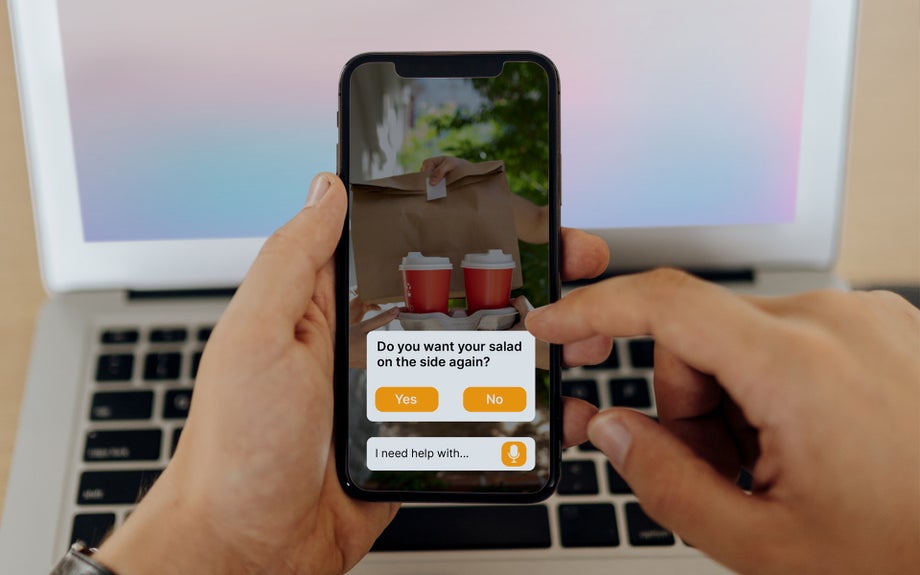

How?

Imagine you are using a chatbot to order food. If you say, "I'd like a pizza with ham and pineapple", the chatbot can probably understand what you want. However, if you then say, "I want a salad as well", the chatbot may not know whether you want salad added as a topping or ordered as a separate item. To overcome this, chatbots must keep track of the conversation history and its context while also accurately recognising what the user is trying to achieve, so that they can respond correctly.
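A minimal rule-based dialogue state tracker for this food-ordering example might look like the sketch below. The menu, the keyword rules and the names `DialogueState` and `update_state` are assumptions made up for illustration. The tracker stores the order so far as its state; because “salad” is a standalone dish rather than a topping, it starts a new order line instead of being attached to the pizza, while a later topping-only request such as “add olives” is attached to the most recent pizza.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical menu: standalone dishes versus pizza toppings.
DISHES = {"pizza", "salad", "pasta"}
TOPPINGS = {"ham", "pineapple", "mushrooms", "olives", "cheese"}


@dataclass
class OrderItem:
    dish: str
    toppings: List[str] = field(default_factory=list)


@dataclass
class DialogueState:
    """The tracker's memory: every item ordered so far, in the order mentioned."""
    items: List[OrderItem] = field(default_factory=list)

    def last_pizza(self) -> Optional[OrderItem]:
        """Most recently mentioned pizza, or None if there is none yet."""
        for item in reversed(self.items):
            if item.dish == "pizza":
                return item
        return None


def update_state(state: DialogueState, utterance: str) -> DialogueState:
    """Apply simple keyword rules to one user turn, updating the state in place."""
    words = re.findall(r"[a-z]+", utterance.lower())
    dishes = [w for w in words if w in DISHES]
    toppings = [w for w in words if w in TOPPINGS]

    # Rule 1: every dish name starts a new order line, so "a salad as well"
    # becomes a second item instead of a pizza topping.
    for dish in dishes:
        state.items.append(OrderItem(dish))

    # Rule 2: toppings attach to the most recent pizza, whether it was mentioned
    # in this turn or earlier in the conversation history.
    if toppings:
        target = state.last_pizza()
        if target is None:
            # No pizza to attach to; a real chatbot would ask a clarifying question.
            return state
        for t in toppings:
            if t not in target.toppings:
                target.toppings.append(t)
    return state


state = DialogueState()
for turn in ["I'd like a pizza with ham and pineapple",
             "I want a salad as well",
             "Add olives please"]:
    update_state(state, turn)
    print(turn, "->", state.items)
```

Hand-written rules like these are easy to inspect but break on phrasing they did not anticipate, which is one reason learned approaches such as memory networks are also used.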

We are likely to see progress in this area relatively soon, as researchers continue to refine these techniques to enable more natural and engaging conversations with AI systems. This should make AI systems less error-prone, so that everyone can have smooth interactions and no one feels left out.

Lastly, multimodal reasoning is indispensable for helping AI systems solve complex tasks. Teaching systems to reason as well as humans is not easy, because they need a holistic understanding of information from different modalities. Cross-modal attention mechanisms are already used in image-text matching tasks, where they have brought notable improvements by letting a model relate information in one modality to information in another, such as linking the words of a text to the relevant regions of an image.

For example, suppose you are learning Spanish with a language app that teaches through a chatbot. You want to know the word for “apple”, so you type “What’s the word for apple in Spanish?”. The chatbot responds with an image of juicy red and green apples and the sentence “Esto es una fruta jugosa que es roja o verde”, which means “This is a juicy fruit that’s red or green”. In this case, the chatbot can align specific parts of the image with relevant words in the sentence. When the word “jugosa” (juicy) appears, attention on the image shifts to the apple’s fresh, succulent texture; when “roja” (red) or “verde” (green) appears, it shifts to the apple’s colours. By combining these visual and textual cues, cross-modal attention acts as a bridge between words and visuals, which makes for a more effective learning experience.
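Cross-modal attention is commonly built on scaled dot-product attention, with queries taken from one modality (here, words) and keys and values taken from another (here, image regions). The sketch below uses tiny hand-made three-dimensional vectors, loosely meaning “texture”, “red” and “green”, purely for illustration; in a real model these vectors would be learned, and the region names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that sum to 1 (max subtracted for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_modal_attention(
    words: Dict[str, Vector], regions: Dict[str, Vector]
) -> Tuple[Dict[str, Dict[str, float]], Dict[str, Vector]]:
    """Attend from each word (query) over image regions (keys and values).

    Returns, per word, the attention weight on each region and the resulting
    context vector (the weighted sum of region features). For simplicity the
    region features serve as both keys and values, with no learned projections.
    """
    names = list(regions)
    dim = len(regions[names[0]])
    weights: Dict[str, Dict[str, float]] = {}
    contexts: Dict[str, Vector] = {}
    for word, q in words.items():
        # Scaled dot-product score between this word and every region.
        scores = [sum(qi * ki for qi, ki in zip(q, regions[n])) / math.sqrt(dim)
                  for n in names]
        w = softmax(scores)
        weights[word] = dict(zip(names, w))
        contexts[word] = [sum(w[j] * regions[n][i] for j, n in enumerate(names))
                          for i in range(dim)]
    return weights, contexts


# Toy features: axis 0 ~ texture, axis 1 ~ red, axis 2 ~ green (illustrative only).
image_regions = {
    "flesh_texture": [1.0, 0.0, 0.0],
    "red_skin": [0.0, 1.0, 0.0],
    "green_skin": [0.0, 0.0, 1.0],
}
sentence_words = {
    "jugosa": [3.0, 0.0, 0.0],
    "roja": [0.0, 3.0, 0.0],
    "verde": [0.0, 0.0, 3.0],
}

weights, _ = cross_modal_attention(sentence_words, image_regions)
for word, w in weights.items():
    focus = max(w, key=w.get)
    print(f"{word}: focuses on {focus} (weight {w[focus]:.2f})")
```

Each word puts most of its weight on the matching region, which is the word-to-image alignment described above. In a trained model, these alignments are learned from data rather than set by hand.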

However, there is still room for improvement in getting AI to pick up fine details and nuances. Current models can miss subtle connections between information from different modalities, because they don’t receive direct supervision or guidance on those connections during training. Researchers are investigating how to enable models to reason more like humans, based on subtle linguistic cues and context.

As organisations continue to use AI to improve the customer experience, I think one area with unrealised potential is making chatbots more inclusive and more like talking to a human. If we can expand how chatbots understand and combine different communication methods, such as text, voice, pictures and gestures, the experience will improve for everyone. Multimodal communication makes interaction between humans and computers far more natural, engaging and inclusive.

This is a particularly good use of AI, because customer expectations are continually rising and people are beginning to expect customer service to be available 24/7. But few of us enjoy “speaking” with a robot, especially one with limited capabilities that can only answer prescribed questions. Imagine how different it would be if you could go online whenever it suited you, ask any question and get an answer. Better still, suppose you had a problem, such as a broken machine or a new purchase that isn’t working. You could show the AI an image of what is broken, and it could suggest solutions.

Now, that’s customer service at a whole new level.